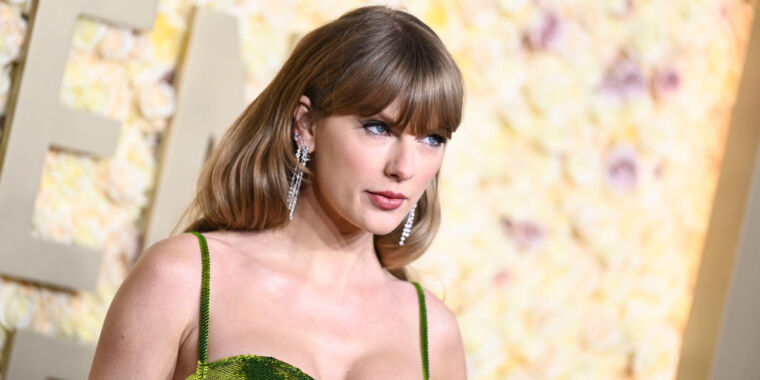

After explicit, fake AI images of Taylor Swift began spreading on X, the platform formerly known as Twitter has attempted to block all searches for the pop star.

“This is a temporary action and done with an abundance of caution as we prioritize safety on this issue,” Joe Benarroch, X’s head of business operations, said in a statement to Reuters.

However, even this drastic step does not seem to be an effective solution, as “Swift” was trending Monday morning on X. The temporary block also does nothing to stop searches using misspellings of the singer’s name.

X did not immediately respond to Ars’ request for comment.

The harmful images first appeared last week on X after leaking from a toxic Telegram channel where users discussed ways to use popular AI tools like Microsoft’s Designer to generate non-consensual intimate imagery of celebrities, 404 Media found. As with the X search workarounds, users relied on misspellings and keyword hacks to evade Designer’s content filters. Ars also found a Reddit thread where users were discussing using Designer to generate sexualized “body paint” images very similar to some of the AI Swift images that went viral.

Last week, Microsoft told Ars that it was investigating, but so far, the company has not confirmed reports that Designer was used to generate the Swift images. That hasn’t stopped Microsoft from taking drastic steps of its own and heavily tightening Designer’s content filters, though.

According to 404 Media, Telegram and 4chan users were disappointed to find this week that Microsoft introduced more safeguards in Designer, seemingly blocking any celebrity’s face from being generated by the tool. Now, keywords that previously worked to generate images of stars like Ariana Grande or Zendaya just show “generic girls” with similar looks, users said, complaining that “Microsoft Designer got hella patched” and it’s “almost like it won’t generate celebs anymore.”

On Tuesday, Microsoft CEO Satya Nadella will appear on NBC Nightly News to discuss the role the company’s tools may have played in generating the AI porn of Swift. Millions viewed some of these images before content removals kicked in, with one of the most widely shared images receiving 47 million views, The New York Times reported.

Ars was sent a transcript from the upcoming NBC broadcast, where Nadella will confirm that Microsoft plans to “act fast” to reduce harms of AI tools like Designer and Bing, which can be used to generate images of anything users can imagine through text prompts.

“I think, first of all, absolutely this is alarming and terrible, and so therefore yes, we have to act,” Nadella told NBC.

Nadella said that “there’s a lot to be done and a lot being done” at Microsoft to place guardrails around AI technologies to prevent harmful outputs, but what’s also needed is a global and societal “convergence on certain norms,” like everyone reaching a consensus that non-consensual AI imagery is harmful. Currently, not every platform agrees on that. Together with law enforcement, platforms “can govern a lot more than we think,” Nadella told NBC.